Trusting AI when the stakes are high

Raytheon Technologies developing explainable AI systems

For anyone visiting a new city, a GPS app is a must-have. But when drivers are in their own hometowns, they often disregard their GPS's directions, believing they know the best route. It's a matter of trust.

Drivers don't understand how their app arrived at its conclusion, so they put more faith in their own instincts and sense of direction than in a machine's algorithm. As artificial intelligence and machine learning become more prevalent in society, trust and explainability are critical for their widespread adoption, especially in high-stakes environments such as healthcare, national security and weather prediction.

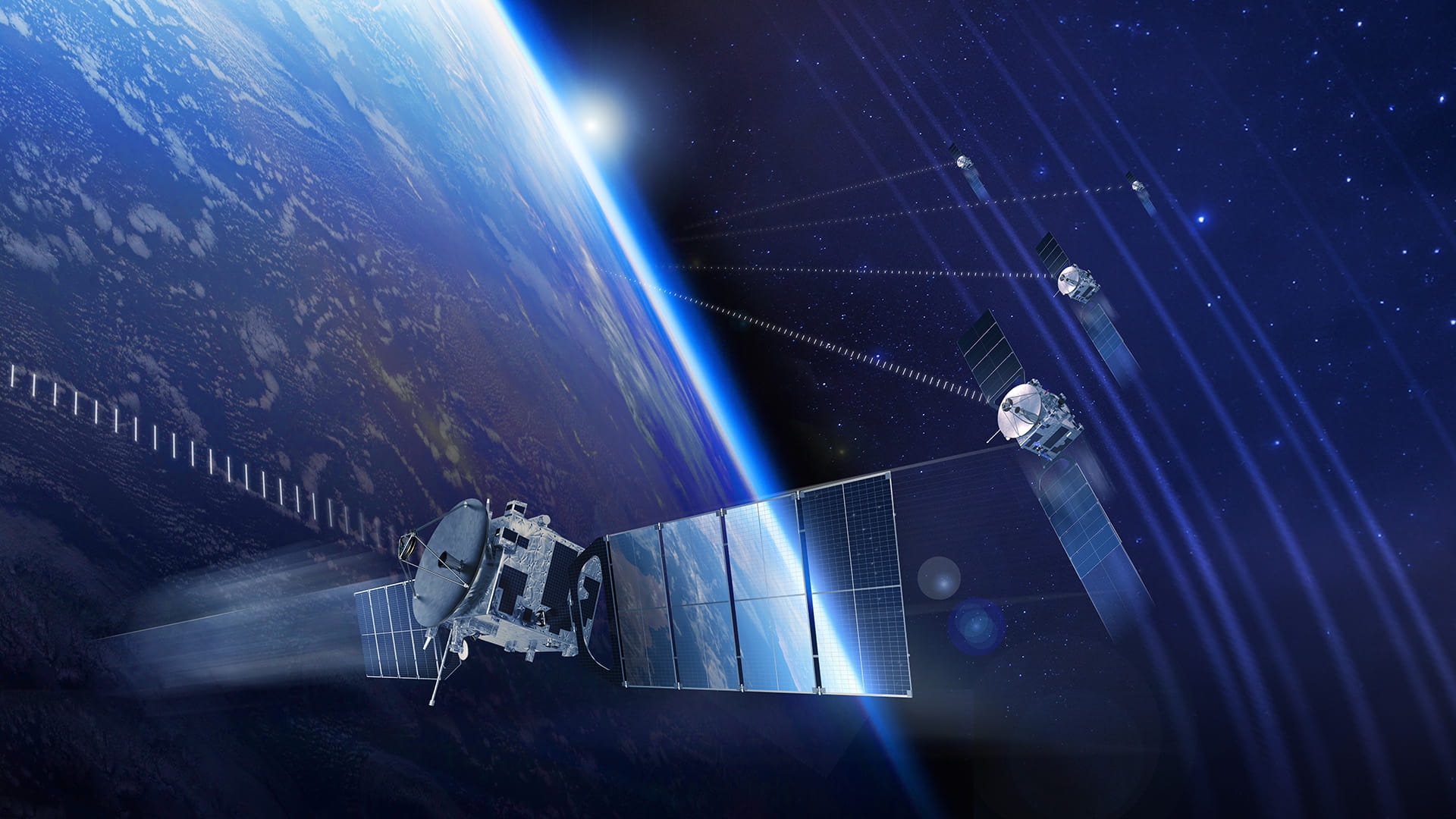

Raytheon Intelligence & Space, a Raytheon Technologies business, is developing artificial intelligence and machine learning systems for space systems, cyber protection, the intelligence community and the military that are transparent, explainable and reliable: AI systems its customers can trust.

“I trust navigation systems, because I know how they work,” said Fotis Barlos, vice president of Analytics and Machine Intelligence at BBN Technologies for Raytheon Intelligence and Space. “A few times, I tried to outsmart Google Maps and I paid for it.”

He added, “It’s the high-consequence missions where AI must prove its competence and trustworthiness, which is something that we’re doing at Raytheon Technologies and BBN.”

One area Raytheon Intelligence & Space specializes in is collecting, fusing and processing petabytes of data for the intelligence community and military, providing them with actionable information so high-stakes decisions can be made quickly. Without AI/ML, the mountains of images streaming down from its space-based sensors would require an army of imagery analysts and hours of time to sift through. Time and manpower that the national security professionals can’t afford.

“With machine learning, we are teaching AI to look at imagery and flag which images an operator may need to pay attention to,” said Jack Allen, C2 Digital Solutions chief engineer for RI&S.

This human-machine teaming will cue up items of interest for analysts instead of them having to manually cull through screen after screen of useless images.

“One hundred images may be nothing but a blank desert, but one of them might have a missile in it,” Allen said. “AI can find the one.”
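The cueing Allen describes amounts to scoring every frame and passing only the high scorers to a human. The minimal Python sketch below illustrates that triage step under stated assumptions: `toy_detector` is a hypothetical stand-in for a trained image model, and the frame names, pixel values and 0.5 threshold are invented for illustration. It is not Raytheon's software.

```python
def triage(images, score_fn, threshold=0.5):
    """Score every image and return (id, score) pairs at or above the threshold,
    most confident first, so an analyst reviews only the flagged few."""
    scored = ((img_id, score_fn(pixels)) for img_id, pixels in images.items())
    flagged = [(img_id, s) for img_id, s in scored if s >= threshold]
    return sorted(flagged, key=lambda pair: pair[1], reverse=True)


def toy_detector(pixels):
    # Hypothetical stand-in for a trained model: returns the strongest response.
    return max(pixels)


# 100 hypothetical frames of "blank desert", one of which contains an object.
frames = {f"frame_{i:03d}": [0.1, 0.1, 0.1, 0.1] for i in range(100)}
frames["frame_042"] = [0.1, 0.95, 0.1, 0.1]
print(triage(frames, toy_detector))  # → [('frame_042', 0.95)]
```

The threshold is the human-machine teaming dial: lowering it sends the analyst more frames to check, raising it trusts the model more.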

AI can recognize patterns in images, as well as shifts in activity. Then it can rapidly characterize the data, classify and identify objects, inform models and present human analysts with intelligence that predicts potential future outcomes.

Raytheon Intelligence & Space can also use AI’s ability to recognize patterns to help weather forecasters at the National Oceanic and Atmospheric Administration. Despite the chaotic nonlinear characteristics of hurricanes, NOAA accurately predicted the path of Hurricane Ida with the aid of AI/ML.

“NOAA uses a technique called ensemble forecasting where they use multiple models, multiple options and slight variations as well as historical data that we’ve collected over the years to predict the path and the strength of the storm,” Barlos said.
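The idea behind ensemble forecasting can be shown with a toy example: run the same forecast many times with slightly varied starting conditions, then take the average as the consensus and the spread as a measure of uncertainty. The Python sketch below covers only the "slight variations" part of what Barlos describes. `toy_track` is a hypothetical straight-line storm model (speed in toy units of degrees per hour), and the perturbation sizes are assumptions; NOAA's real ensembles combine several physics-based models and historical data.

```python
import math
import random
import statistics


def toy_track(lat, lon, heading_deg, speed_deg_per_hr, hours):
    # Hypothetical stand-in for one forecast model: moves the storm center in a
    # straight line (0 degrees = north, 90 = east). Real models simulate physics.
    rad = math.radians(heading_deg)
    dist = speed_deg_per_hr * hours
    return lat + dist * math.cos(rad), lon + dist * math.sin(rad)


def ensemble_forecast(lat, lon, heading_deg, speed, hours, members=50, seed=0):
    """Run many slightly perturbed members; return (mean endpoint, spread)."""
    rng = random.Random(seed)
    endpoints = []
    for _ in range(members):
        # Each member nudges the initial heading and speed a little.
        h = heading_deg + rng.gauss(0, 5)
        s = speed * (1 + rng.gauss(0, 0.05))
        endpoints.append(toy_track(lat, lon, h, s, hours))
    lats = [p[0] for p in endpoints]
    lons = [p[1] for p in endpoints]
    mean = (statistics.mean(lats), statistics.mean(lons))
    spread = (statistics.stdev(lats), statistics.stdev(lons))
    return mean, spread


mean, spread = ensemble_forecast(25.0, -88.0, heading_deg=0.0, speed=0.2, hours=24)
print(f"consensus endpoint: {mean[0]:.2f}N, {abs(mean[1]):.2f}W; spread: {spread}")
```

A tight spread suggests the members agree and the forecast can be trusted more; a wide spread is itself useful information for forecasters.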

The same sort of ensemble modeling is being used for the 2021 California wildfires.

“Our sensors combined with AI could help identify escape routes and ML can optimize looking at fire patterns, where they're going and how fast they're going to get there,” said David Appel, vice president of C2 Digital Solutions for Raytheon Intelligence & Space. “It can identify and reroute first responders to be able to rescue men and women who are at risk.”

Repairing satellites before they fail

Satellite telemetry analysis tools, which report the health of satellites back to the ground station, were designed to work in real time – but they struggle to predict problems. To overcome system limitations of the Joint Polar Satellite System, Raytheon Intelligence & Space partnered with C3.ai, a leader in artificial intelligence and machine learning. The team created a predictive anomaly detection capability in the software to flag potential problems so operators can intervene and prevent disruption to the mission.

The capability uses a machine-learning algorithm to monitor the performance of battery-powered systems on low Earth-orbiting satellites that take weather and climate measurements for the U.S. government.

Such algorithms need a lot of data to learn from – and in this case, they had plenty.

“Four years and nearly 3.7 billion records of historical telemetry data were used to create the detection algorithm for this pilot,” said Anthony Bush, director of Civil Space and Weather at RI&S. “Feeding legacy data from a known event into the AI software enabled us to establish a baseline for success and prove out our hypothesis.”

Based on the results from the project, the team estimated a potential 50% increase in vehicle engineer efficiency in predicting problems and addressing them in advance.

“That’s the power of AI,” Appel said. “We can solve problems before they even appear. And, by keeping systems online and available, agencies can potentially save millions of dollars per satellite.”

According to Allen, this predictive maintenance AI identified a potential problem on JPSS; however, the program’s engineers needed to confirm it by doing the math first.

“They wanted to make sure the machine was correct,” he said. “It’s what we call explainability.”

With human-machine teaming, customers with high-consequence missions want to be sure that, when they employ machines to assist them, they understand what went into the machine's decision.

“The field of explainability is going to be critical to sealing early adoption because simply turning over what you've always done yourself to something that you didn’t build, that you don’t understand, there's a trust factor that doesn’t exist,” Allen said.

Trust in the machine

Raytheon Intelligence & Information Systems and BBN Technologies are working on a project with the Defense Advanced Research Projects Agency to help build that trust factor.

They're developing a first-of-its-kind neural network, a brain-like AI system, that explains itself. Raytheon Technologies' Explainable Question Answering System (EQUAS) will show users which data mattered most in the artificial intelligence decision-making process. Users can ask the system questions about chosen recommendations and discover why it rejected others.

"We know why humans may mess up a decision. There's no intuitive way to know when machines are wrong," said Bill Ferguson, lead scientist and EQUAS principal investigator at BBN Technologies. "To build trust, we have to give the user enough information about how the recommendation was made so they feel comfortable acting on the system's recommendation."

Built on hundreds of millions of data sets, EQUAS uses pictures to explain itself. A heat map lights up on a picture to show which characteristics influenced its decision. If the user asks why it came to the conclusion it did, the system uses words to explain its logic.
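The article does not explain how EQUAS computes its heat maps. One common, easy-to-follow way to produce such a map is occlusion sensitivity: blank out one region at a time and measure how much the model's confidence drops. The Python sketch below shows that swapped-in technique on a toy 3x3 image; `bright_spot_score` is a hypothetical stand-in for a real classifier.

```python
def occlusion_heatmap(image, score_fn, patch=1, fill=0.0):
    """Occlusion-sensitivity map: blank out each patch in turn and record how much
    the model's score drops. Large drops mark regions the decision relied on."""
    h, w = len(image), len(image[0])
    base = score_fn(image)
    heat = [[0.0] * w for _ in range(h)]
    for r in range(0, h, patch):
        for c in range(0, w, patch):
            rows = range(r, min(r + patch, h))
            cols = range(c, min(c + patch, w))
            occluded = [row[:] for row in image]  # copy so the original is untouched
            for rr in rows:
                for cc in cols:
                    occluded[rr][cc] = fill
            drop = base - score_fn(occluded)
            for rr in rows:
                for cc in cols:
                    heat[rr][cc] = drop
    return heat


def bright_spot_score(img):
    # Hypothetical toy "detector": confidence = brightest pixel value.
    return max(max(row) for row in img)


image = [
    [0.1, 0.1, 0.1],
    [0.1, 0.1, 0.9],
    [0.1, 0.1, 0.1],
]
for row in occlusion_heatmap(image, bright_spot_score):
    print([round(v, 2) for v in row])
```

Only the bright pixel "lights up" in the resulting map, because hiding it is the only change that lowers the toy detector's confidence. A real system would use larger patches and a trained network, but the logic of the explanation is the same.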

“A fully developed system like EQUAS could help with decision-making not only in DoD operations, but in a range of other applications, like campus security, industrial operations and the medical field,” said Ferguson. “Say a doctor has an ultrasound image of a lung and wants to validate if there are shadows on the image, which may indicate cancer. The system could come back and say, yes there are shadows, and based on these other examples, we should investigate this diagnosis further.”